One technique that saves LLM training

Gradient Checkpointing: A Trade-off Between Computation and Memory

Introduction

If you have ever trained an LLM or even tried fine-tuning an LLM, then you know how computationally intensive and memory-consuming these training jobs are.

The weights of a billion-parameter model already take up a large share of GPU memory, and the activations saved during training can push even high-end GPUs past their limit. One simple trick relieves much of that pressure: gradient checkpointing.

Why Gradient Checkpointing?

When you train a deep learning model, it runs the usual forward pass followed by backpropagation, and it stores the intermediate activations from the forward pass so they can be reused when computing gradients.

But when a model has billions or even trillions of parameters, the activations it produces are too large to store in full on many high-end GPUs, and training models like GPT or PaLM would run out of memory.

So, how do you solve the memory problem? Don't store everything. Keep a few activations and recompute the rest when you need them, as simple as that.

How does Gradient Checkpointing help in training LLMs?

Training an LLM is a trade-off between computation and memory.

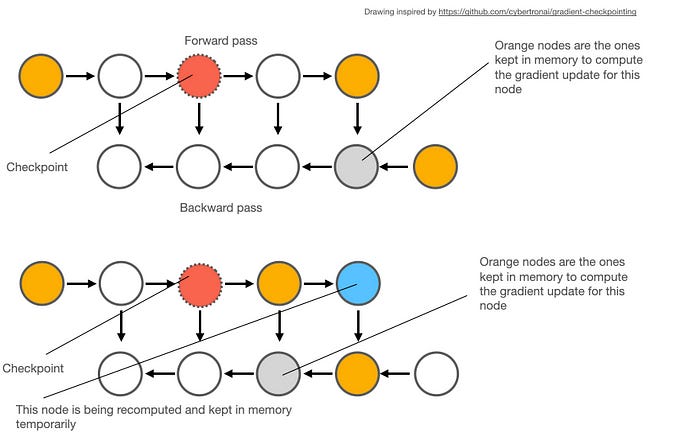

In deep learning, during the forward pass, a neural network processes the input data layer by layer, generating intermediate activations (the outputs of each layer). These activations are usually stored in memory because they are needed later during backpropagation to compute gradients and update weights.

However, in very large models (like LLMs), storing all these activations can consume too much GPU memory, resulting in an out-of-memory issue.
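To get a feel for the scale, here is a rough back-of-the-envelope sketch. It counts only one hidden-state tensor per layer, stored at 2 bytes per value. The function name `activation_bytes` and the model shape are hypothetical, chosen only for illustration. Real transformer layers keep several more tensors each, so treat the result as a floor rather than a measurement.

```python
def activation_bytes(num_layers, batch_size, seq_len, hidden_size, bytes_per_value=2):
    """Rough bytes needed to keep ONE hidden-state tensor per layer.

    Assumption: each layer stores a single (batch, seq_len, hidden) tensor.
    Real layers store more (attention and MLP intermediates), so this
    underestimates actual usage.
    """
    values_per_layer = batch_size * seq_len * hidden_size
    return num_layers * values_per_layer * bytes_per_value


# Hypothetical model shape, not taken from any specific LLM.
total = activation_bytes(num_layers=32, batch_size=8, seq_len=2048, hidden_size=4096)
print(f"{total / 2**30:.1f} GiB just for per-layer hidden states")
```

Even under this optimistic count, the activations alone take gigabytes, and the figure grows linearly with the number of layers, the batch size, and the sequence length.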

Gradient Checkpointing is a memory-saving trick. Here’s how it works:

Instead of saving every activation during the forward pass, it saves only a few key checkpoints (e.g., every N layers).

During backpropagation, it recomputes the missing intermediate activations from these checkpoints instead of retrieving them from memory.
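The two steps above can be sketched on a toy model in plain Python. This is a minimal illustration, not how any deep learning framework implements it. The "network" is a chain of scalar layers `tanh(w * x)`, and every function name here is made up for the example. The baseline keeps every layer input, while the checkpointed version keeps only the input of every `every`-th layer and rebuilds the rest one segment at a time.

```python
import math


def layer_forward(w, x):
    return math.tanh(w * x)


def layer_backward(w, x, grad_out):
    """Given the layer input x and dL/d(output), return (dL/dw, dL/dx)."""
    y = math.tanh(w * x)
    local = 1.0 - y * y  # derivative of tanh at w * x
    return grad_out * local * x, grad_out * local * w


def backward_full(weights, x0):
    """Baseline: keep the input of every layer in memory."""
    inputs, x = [], x0
    for w in weights:
        inputs.append(x)
        x = layer_forward(w, x)
    grad, grads_w = 1.0, [0.0] * len(weights)  # loss = final output
    for i in reversed(range(len(weights))):
        grads_w[i], grad = layer_backward(weights[i], inputs[i], grad)
    return grads_w, len(inputs)


def backward_checkpointed(weights, x0, every):
    """Keep only every `every`-th layer input; recompute the others."""
    n = len(weights)
    checkpoints, x = {}, x0
    for i, w in enumerate(weights):
        if i % every == 0:
            checkpoints[i] = x  # saved checkpoint
        x = layer_forward(w, x)  # other inputs are discarded
    grad, grads_w = 1.0, [0.0] * n
    peak_stored = len(checkpoints)
    for start in sorted(checkpoints, reverse=True):
        end = min(start + every, n)
        # Recompute this segment's layer inputs from its checkpoint.
        x = checkpoints[start]
        seg_inputs = [x]
        for i in range(start, end - 1):
            x = layer_forward(weights[i], x)
            seg_inputs.append(x)
        # Checkpoints plus the recomputed (non-checkpoint) inputs.
        peak_stored = max(peak_stored, len(checkpoints) + len(seg_inputs) - 1)
        for i in reversed(range(start, end)):
            grads_w[i], grad = layer_backward(weights[i], seg_inputs[i - start], grad)
    return grads_w, peak_stored


weights = [0.5 + 0.1 * i for i in range(12)]
full_grads, full_stored = backward_full(weights, 0.3)
ckpt_grads, ckpt_stored = backward_checkpointed(weights, 0.3, every=4)
print("same gradients:", all(math.isclose(a, b) for a, b in zip(full_grads, ckpt_grads)))
print("activations held at peak:", full_stored, "vs", ckpt_stored)
```

Both versions produce the same gradients. The checkpointed one simply holds fewer activations at any moment and pays for that by running some layers forward a second time.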

This means you end up trading more computation (due to recomputing) for less memory usage, thus allowing you to train much larger models without running out of GPU memory.
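To put rough numbers on that trade-off, the sketch below counts layers instead of bytes. It assumes a simple scheme: a checkpoint at the input of every `every`-th layer, and one segment recomputed at a time during backpropagation. The helper `checkpoint_cost` is hypothetical, and actual frameworks may count things differently.

```python
import math


def checkpoint_cost(num_layers, every):
    """Return (peak activations held, layers recomputed) for a chain of layers.

    Assumes one checkpoint per segment of `every` layers, with the rest of a
    segment's inputs rebuilt only while that segment is being backpropagated.
    """
    num_checkpoints = math.ceil(num_layers / every)
    largest_segment = min(every, num_layers)
    # All checkpoints stay in memory, plus the non-checkpoint inputs
    # of the segment currently being processed.
    peak_stored = num_checkpoints + largest_segment - 1
    # Every layer input that was not checkpointed costs one extra layer forward.
    recomputed = num_layers - num_checkpoints
    return peak_stored, recomputed


for every in (1, 4, 8, 16, 64):
    stored, recomputed = checkpoint_cost(64, every)
    print(f"every={every:>2}: peak stored={stored:>2}, recomputed layers={recomputed}")
```

Under these assumptions, a 64-layer chain holds the fewest activations when a checkpoint is placed every 8 layers, which is the square root of 64. Spacing checkpoints too far apart makes each recomputed segment large, so memory climbs back up. In every case the extra work stays below one additional forward pass.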

It’s like saving only a few landmarks on a hiking trail and re-walking short sections later if you need to remember the exact route, rather than recording every single step.

Conclusion

The success of LLMs depends on various optimization techniques that are rarely remembered or even talked about. Gradient checkpointing is one such optimization, and it helps make training models like GPT and Llama feasible.